How to Improve SEO by Tackling Index Bloat and Crawl Waste

SEO has always been the practice of making a website findable, readable, and crawlable. Technical SEO was the foundation of SEO, but today, if you’re thinking about how to improve your SEO, link building and content work should also be part of your focus.

Some have concluded that the technical backbone of SEO isn’t nearly as important anymore. Maybe modern web development has outpaced the knowledge of most SEOs, and newcomers treat it as an esoteric practice they can choose not to study.

But many, including myself, argue that technical SEO is more important than ever.

Google previously told us they couldn’t render content built with certain languages, like JavaScript. Since then, Google’s Gary Illyes has said, “We can now render the entire web.” On the surface, this looks like another reason technical SEO is on the way out. But those of us in the trenches know that Google still struggles with accurate crawls and renderings.

Why Accurate Google Crawls Matter

If you trust what Google says, they can render virtually any codebase, but we’ve seen many cases of Googlebot missing the mark: prioritizing the wrong pages and treating important pages as valueless. Crawling everything on the internet is a costly endeavor for Google, so Google’s algorithm tells its bots to give “unimportant” pages much lower priority. As Google crawls a site and identifies pages as important, they increase the crawl budget they assign to it (think of it as “fuel” for a fleet of automobiles). If they can’t get a good crawl, or can’t see the pages the way users see them, they are less likely to process your important pages, and they will be conservative with their fuel.

So, what happens when Google improperly classifies your pages as unimportant?

What if Google bypasses what your business considers necessary crawl paths? Or what if Google’s discovery algorithms are just flat-out wrong?

Well, in these cases, Google makes their bots intentionally lazy. This isn’t new; Google has been doing this for several years now.

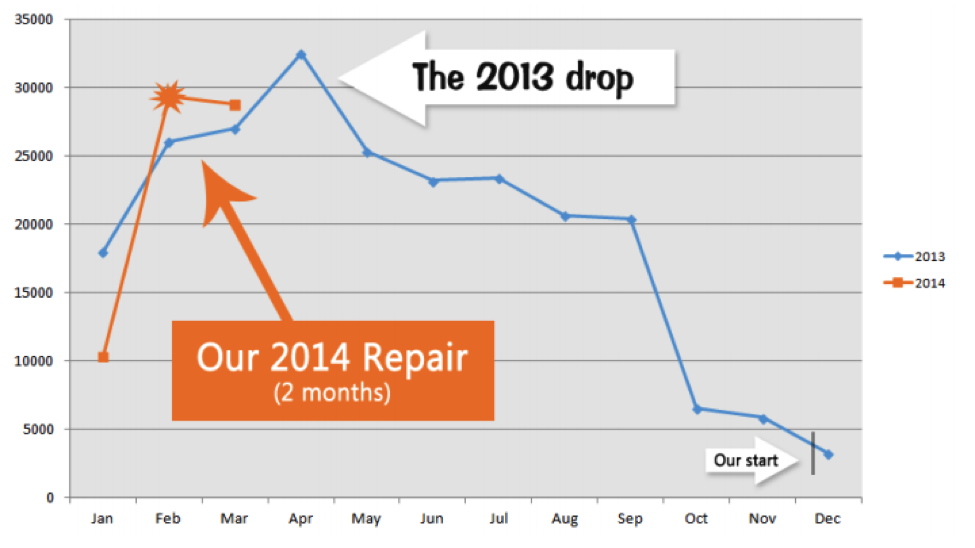

This is actual data from a client who neglected their technical SEO in 2013. A technical SEO repair was the only work that brought their traffic back. Duplicate URLs, index waste, and index bloat were poisoning them. After the site received several “large amount of URLs” warnings in Search Console, you can see where Google decided to ratchet down the indexation. Maybe it was an effort to purge the index bloat, but it went too far. This correlated with a huge drop in traffic.

No other onsite changes were made besides adding a new page here and there. No smoking gun, no real warning signs, except those nagging Search Console messages. This led to our first campaign of intensive weed pulling.

Was I surprised to see such a huge bounce-back? Hell yes. But it was a lesson that still drives us today. Technical SEO matters, a lot. Don’t skimp. At Greenlane, we’re heavy on technical capabilities, and we see these stories repeat themselves often.

So, let’s talk about some of our common focus points, notably the silent killers, index bloat and crawl waste.

Index Bloat

To avoid a purge like the one in my example above, we always want to keep the index properly balanced. Does the indexed count in Search Console reasonably match the number of pages you actually have?

If you’re not sure how many pages your website contains, a site crawler like Sitebulb or Screaming Frog can help. Crawl only the canonical versions of your URLs for a truer count of your primary pages. If the site is large, your DevOps team may be able to provide a page count from their internal systems.

If the numbers do not align, you have some avenues to pursue:

Is the count in Search Console much higher? If so, it’s time to pull weeds with noindex tags, canonical tags, robots.txt rules, and nofollow attributes.

Is the count much lower? You might have a crawlability issue. Time to review your internal linking structure.
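To make this comparison repeatable, here is a minimal Python sketch. It assumes you have exported your crawler’s results as (url, canonical_url) pairs, treats a page as primary when its canonical is missing or points at itself, and uses a hypothetical 20% tolerance before calling the two counts misaligned. The function names and the tolerance are illustrative assumptions, not part of any tool.

```python
from typing import Iterable, Optional, Tuple

# Hypothetical tolerance: how far the indexed count may drift from the
# canonical page count before we treat it as a mismatch (an assumption).
TOLERANCE = 0.2


def count_canonical_pages(crawl_rows: Iterable[Tuple[str, Optional[str]]]) -> int:
    """Count primary pages from (url, canonical_url) pairs exported by a crawler.

    A page counts as primary when its canonical is missing or points at itself.
    """
    return sum(1 for url, canonical in crawl_rows if canonical in (None, "", url))


def compare_index(indexed_count: int, canonical_count: int,
                  tolerance: float = TOLERANCE) -> str:
    """Compare the Search Console indexed count with the crawler's canonical count."""
    if canonical_count <= 0:
        raise ValueError("canonical_count must be positive")
    ratio = indexed_count / canonical_count
    if ratio > 1 + tolerance:
        # More indexed URLs than real pages: likely index bloat, time to pull weeds
        return "possible index bloat"
    if ratio < 1 - tolerance:
        # Fewer indexed URLs than real pages: likely a crawlability problem
        return "possible crawlability issue"
    return "roughly aligned"


if __name__ == "__main__":
    rows = [
        ("https://example.com/a", "https://example.com/a"),
        ("https://example.com/b", None),
        ("https://example.com/a?ref=nav", "https://example.com/a"),  # canonicalized away
    ]
    pages = count_canonical_pages(rows)
    print(pages, compare_index(183, pages))
```

The ratio check is deliberately crude; its only job is to tell you which of the two avenues above to investigate first.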

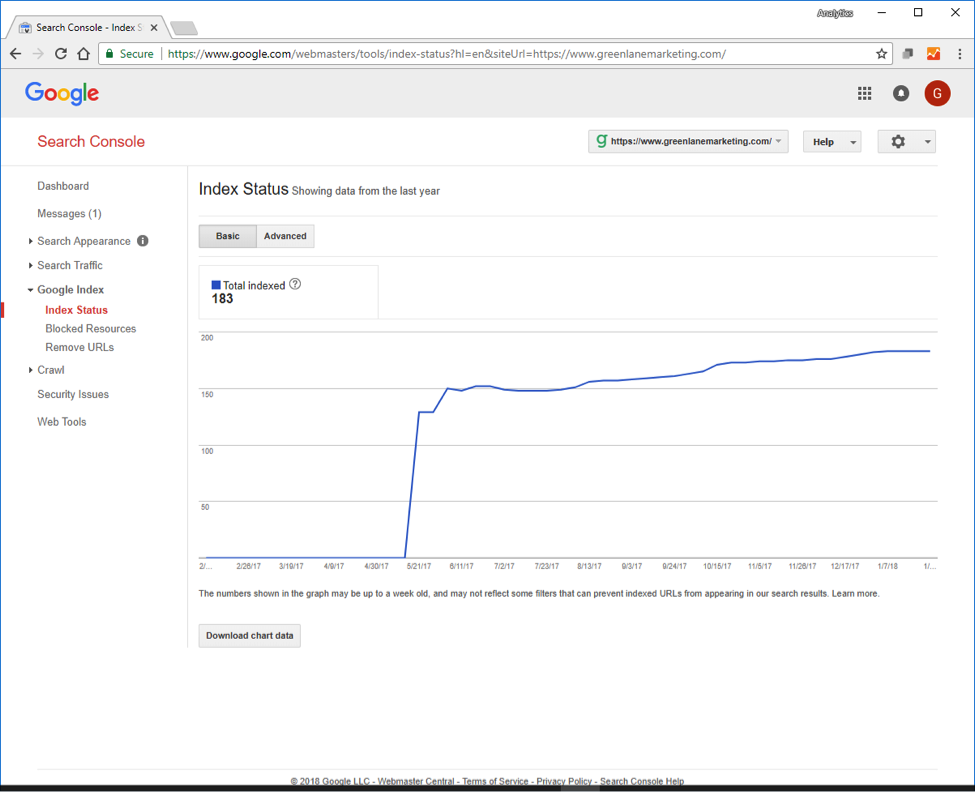

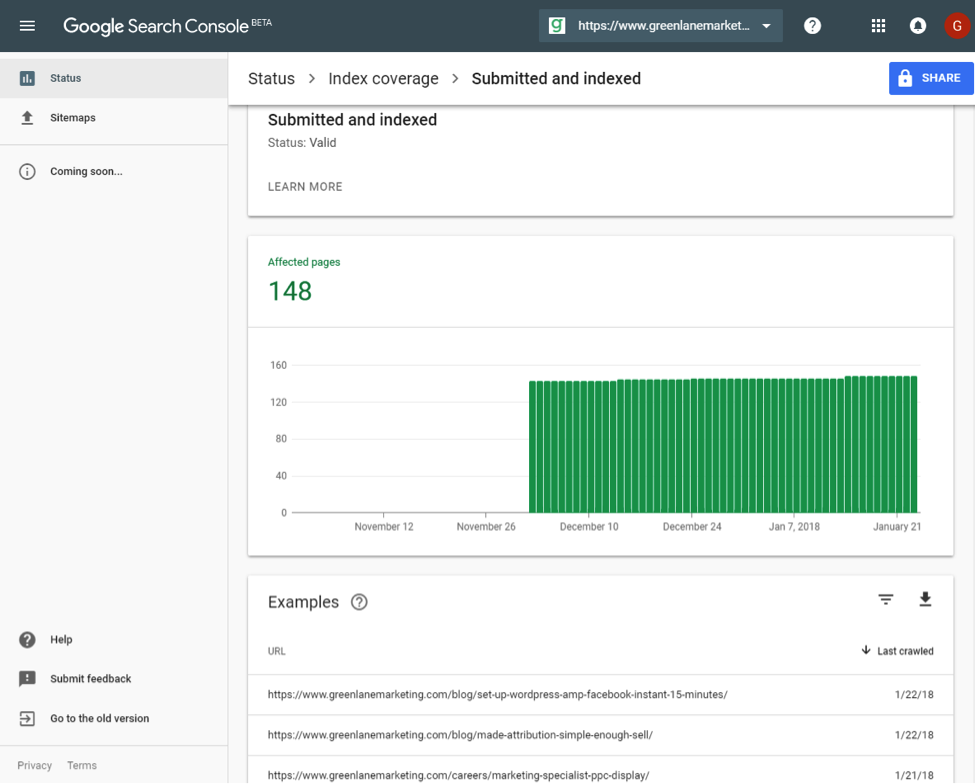

One of the biggest issues with this legacy Index Status report is its lack of transparency (if you’re not familiar, Google has begun rolling out a new Search Console interface). In our example above, we can see that 183 pages are indexed, but not which pages they are. Out of frustration, we built an indexation checker to solve this problem, but with the new Search Console rollout, it looks like Google has made an attempt to provide that transparency. (Maybe our tool inspired the big G, though their tool does have a cap!)

Crawl Waste

Crawl waste (not to be confused with wasted crawling, which will happen after hanging out with the Pitchbox team in NYC) is letting bots crawl pages they don’t need to bother with.

Google determines the amount of bandwidth, or fuel, they give their fleet of bots; as an SEO, you want every drop of that gas put to good use, so that Google sees only the important stuff.

1. If you want Google to see the noindex and canonical tags you’ve added to help with index bloat or crawl budget management, don’t block those URLs in your robots.txt file. If you do, Google won’t see your instructions, and you may find those pages still indexed. This is a more common problem than you might think.

2. If you have pages that aren’t being crawled enough (which you can see in your log files), run a crawl of your site and look at the number of internal links pointing to them and the position of those links (in the navigation, main body content, or footer). How important are you telling search engines these pages are?

3. Controlling what’s crawled and indexed on your site means a better user experience, with your best content crawled more frequently and indexed more quickly. Between server log files and the new Search Console Index Coverage and URL Inspection tools, we have more data than ever on how Google handles your site, where budget is being wasted on the wrong pages, and what’s happening with your most important pages. Use this data to your benefit, and don’t be afraid to prune bad pages when search engines are wasting time on them. A tidy, fast, efficient site is your goal.
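Point 1 is easy to check mechanically. The Python sketch below uses the standard library’s `urllib.robotparser` to flag URLs that carry noindex or canonical tags but are disallowed in robots.txt, meaning Googlebot would never fetch them and never read those tags. It assumes the list of tagged URLs comes from your own crawl export, and the function name is hypothetical. Note that `urllib.robotparser` uses simple prefix matching where the first matching rule wins; it does not implement Google’s wildcard and longest-match handling, so treat its answers as an approximation for complex robots.txt files.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def blocked_directive_urls(robots_txt: str, tagged_urls: Iterable[str],
                           user_agent: str = "Googlebot") -> List[str]:
    """Return URLs that carry noindex/canonical tags but are blocked by robots.txt.

    Googlebot cannot fetch these pages, so it never sees the tags on them.
    """
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made
    parser.parse(robots_txt.splitlines())
    return [url for url in tagged_urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /search\nDisallow: /tag/\n"
    # Hypothetical URLs that your crawler found carrying noindex or canonical tags
    tagged = [
        "https://example.com/search?q=shoes",
        "https://example.com/tag/red",
        "https://example.com/products/red-shoes?color=red",
    ]
    for url in blocked_directive_urls(robots, tagged):
        print("Blocked, so its tags are invisible to Googlebot:", url)
```

Any URL this reports needs either its robots.txt rule removed (so the tag can be read) or its tag dropped in favor of the block, depending on whether you want it out of the index or simply not crawled.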

Google doesn’t give a great breakdown of the crawl budget they assign to a site at a given time. The only way to find these answers and understand where the bots are going is with log files.

Log files, or server logs, are a server’s record of usage. That activity includes visits from bots, including search engine crawlers. On high-volume sites, these files can be enormous and messy at first glance, but tools exist to distill the data into something usable. For example, with a tool like Screaming Frog’s SEO Log File Analyser, we can tabulate the data into a usable form.
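To show roughly what a log analyser does under the hood, here is a minimal Python sketch that counts Googlebot requests per URL and tallies non-200 responses, which is where errors and redirect chains show up. It assumes your server writes the common Apache/Nginx “combined” log format; other formats need a different pattern. It identifies Googlebot by user-agent string only, which can be spoofed, so verify important findings (for example with a reverse DNS lookup) before acting on them. The function name is hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Combined log format (assumed):
# host ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
COMBINED_LOG = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] '
    r'"\S+ (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_profile(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Return (hits per path, non-200 hits per (path, status)) for Googlebot."""
    hits: Counter = Counter()
    problems: Counter = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        # Skip malformed lines and requests from other user agents
        if not match or "Googlebot" not in match.group("agent"):
            continue
        path, status = match.group("path"), match.group("status")
        hits[path] += 1
        if status != "200":
            # Redirects (3xx) and errors (4xx/5xx) that consume crawl budget
            problems[(path, status)] += 1
    return hits, problems
```

Calling `hits.most_common()` on the result shows where Googlebot spends its visits, and pages you care about that barely appear are the ones worth a closer look.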

What can we learn? If a particular page is not ranking well and you think it should perform better, maybe it isn’t getting many visits from Googlebot. There’s a general correlation between well-crawled pages and rankings, so you could focus on internal linking to that page. Or maybe a site error or redirect chain is dampening Google’s interest in visiting the site.
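One way to act on this, together with the internal link check in point 2 above, is sketched below in Python. It assumes a crawler export of internal links as (source, target, position) rows, where position is a label such as navigation, body, or footer, plus a per-URL count of Googlebot hits from your logs. The function names and the `min_hits` cut-off are hypothetical; you would tune the threshold for your own site.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Mapping, Tuple


def inlink_profile(edges: Iterable[Tuple[str, str, str]]) -> Dict[str, Counter]:
    """Count internal links pointing at each URL, broken down by link position."""
    profile: Dict[str, Counter] = defaultdict(Counter)
    for source, target, position in edges:
        if source != target:  # ignore self-links
            profile[target][position] += 1
    return profile


def under_crawled(key_urls: List[str], hits: Mapping[str, int],
                  profile: Mapping[str, Counter],
                  min_hits: int) -> Dict[str, Dict[str, int]]:
    """Key URLs with fewer than min_hits Googlebot requests, with their inlink breakdown."""
    return {
        url: dict(profile.get(url, Counter()))
        for url in key_urls
        if hits.get(url, 0) < min_hits
    }


if __name__ == "__main__":
    edges = [("/", "/a", "navigation"), ("/b", "/a", "body"), ("/", "/c", "footer")]
    hits = {"/a": 30, "/c": 1}  # hypothetical Googlebot hits taken from log files
    print(under_crawled(["/a", "/c", "/d"], hits, inlink_profile(edges), min_hits=5))
```

A key page that shows up here with only footer links, or with no internal links at all, is a natural first candidate for stronger internal linking.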

Crawl waste can be a serious issue for medium to large websites. Using the URL Inspection tool in the Google Search Console beta, you can see the last crawl date of key URLs and landing pages across the website. You can then compare this to the last cache date (which is also the last time Google indexed the page). If you notice that your key landing pages haven’t been crawled in a while, you may have a problem.

You can also use Google Search Console’s Crawl Stats report to run a very basic calculation:

(number of pages found in a site: search of root domain) ÷ (average pages crawled per day in GSC) = N

If N is greater than 10, that typically signals an issue: it means Google is taking 10 or more days to crawl your website. If it’s less than 5, there isn’t much of a problem. However, if N comes back as a reasonable number like 4, yet the last crawl and last cache dates show it’s been a lot longer than 4 days since key pages were crawled and their index entries refreshed, that’s a sign you have crawl waste issues.
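The rule of thumb above can be written as a small Python sketch. The 10 and 5 thresholds come from the text, which gives no verdict between them, so the sketch reports that range as inconclusive. The staleness check assumes you have collected last crawl dates for key URLs (for example from the URL Inspection tool or your logs), and its `slack` multiplier, which stands in for “a lot longer than N days,” is a hypothetical value you should tune. All function names are illustrative.

```python
from datetime import date
from typing import Dict, List


def crawl_cycle_days(indexed_pages: int, avg_crawled_per_day: float) -> float:
    """N = (pages found in a site: search) / (average pages crawled per day in GSC)."""
    if avg_crawled_per_day <= 0:
        raise ValueError("avg_crawled_per_day must be positive")
    return indexed_pages / avg_crawled_per_day


def assess_cycle(n: float) -> str:
    """Apply the article's thresholds to N."""
    if n > 10:
        return "likely crawl issue"
    if n < 5:
        return "probably fine"
    return "inconclusive"  # the text gives no rule for 5 <= N <= 10


def stale_key_pages(last_crawled: Dict[str, date], today: date,
                    n: float, slack: float = 2.0) -> List[str]:
    """Key URLs whose last crawl is much older than N days (slack is an assumption)."""
    limit = n * slack
    return sorted(url for url, crawled in last_crawled.items()
                  if (today - crawled).days > limit)


if __name__ == "__main__":
    n = crawl_cycle_days(4000, 1000)  # hypothetical figures
    crawled = {"/pricing": date(2018, 6, 1), "/blog": date(2018, 6, 9)}
    print(n, assess_cycle(n), stale_key_pages(crawled, date(2018, 6, 10), n))
```

When `assess_cycle` says “probably fine” but `stale_key_pages` still returns URLs, that is the crawl waste pattern described above.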

We know there are correlations between Google being able to crawl a website effectively and the site’s maximum potential performance in organic search results. With this method, you can identify crawl issues for key pages and use the data to help build a business case for the resources to resolve them.

In all my years as an SEO, I have literally never done a technical analysis where I did not see ways to improve the site. On the surface, crawl waste may seem like something only very large sites need to worry about, but when you start digging into the details, it can often be a symptom of bigger problems with duplication or canonicalization, even on small sites.

Final Thoughts

Technical SEO is complicated. It takes patience and technical aptitude, but you shouldn’t avoid it if you lack either. Technical SEO is alive and well, and it’s a very important part of your Search Engine Optimization mix. Despite the maturation of websites in 2018, plenty of platforms still do more technical SEO harm than good. Google, to a degree, has taken on the burden of understanding even the worst-built websites in order to find the best content for their users, but the job of an SEO has always been to hold Google’s hand in doing this. Lift the hood, jump in, and get your hands dirty.